Categorization AI

2023-2024

Overview

Bench was the largest bookkeeping service for small businesses in America. I led the redesign of Bench’s transaction categorization, transforming a high-touch service into a scalable, AI-assisted system. By introducing conversational AI and smart bulk categorization, we increased monthly book completions by 22% and maintained 95%+ accuracy, while giving customers more control, trust, and time savings.

Company

Bench Accounting

Role

Principal Product Designer

End-to-end UX, UI, conversational design, service design, research

tl;dr

Problem

Bench's categorization relied largely on bookkeeping specialists manually reviewing transactions. This created bottlenecks, delays, and errors. Customers felt frustrated with slow books and limited control.

Solution

- Categorization Assistant: Conversational AI guiding complex categorizations and verifications in real time

- Similar Transactions: Smart grouping to reduce repetitive categorizations

- Service redesign: A redesigned system that gives customers more agency, with redefined bookkeeper and specialist roles for smooth AI-human handoffs

Results

230,000

transactions categorized via AI conversations in 3 months (equivalent to ~30,000 hours of human bookkeeping)

67%

time savings: customers cut time spent categorizing from ~30 minutes to ~10 minutes per session (based on customer feedback)

22%

increase in monthly book completions

95%+

categorization accuracy

0.1%

drop-off rate in the categorization flow, indicating strong customer trust and adoption

Manual categorization wasn't scalable

By 2023, categorization at Bench had become a bottleneck. A small group of specialists was handling tens of thousands of ambiguous transactions each month, often without full customer context. Customers could only pick from a limited set of pre-approved categories or leave notes for review, then wait days for follow-up. This led to delays, errors, customer frustration, and workarounds. Some customers even built parallel spreadsheets, a sign that the product was losing its value to them.

At the same time, trust was non-negotiable. Customers chose Bench for tax accuracy, and operations worried that opening up categorization risked compliance errors.

AI-assisted, human-verified

As Principal Product Designer, I redesigned Bench's transaction categorization system, balancing automation with trust. This was a key initiative to scale the service and enable lower-cost tiers.

Categorization Assistant

- AI assistant helps customers categorize complex transactions in real time

- Clarifying questions ensure tax compliance, with bookkeeper review in background

- Immediate results reduce customer wait times from days to minutes

Similar Transactions

- Surfaces groups of similar transactions for customers to act on at once

- Trains the algorithm to auto-categorize future transactions

- Checkbox UI allows easy review and adjustment

Service redesign

- Redefined workflows: AI handles first pass; specialists review exceptions; bookkeepers ensure final accuracy

- Clear in-flow messaging reassures customers of bookkeeper oversight and available escalation paths

Building the AI-integrated categorization system

My role spanned end-to-end execution across customer research, prototyping, conversation design, service design, and collaboration with PMs, engineers, bookkeepers, and AI specialists. I redesigned both customer and internal workflows.

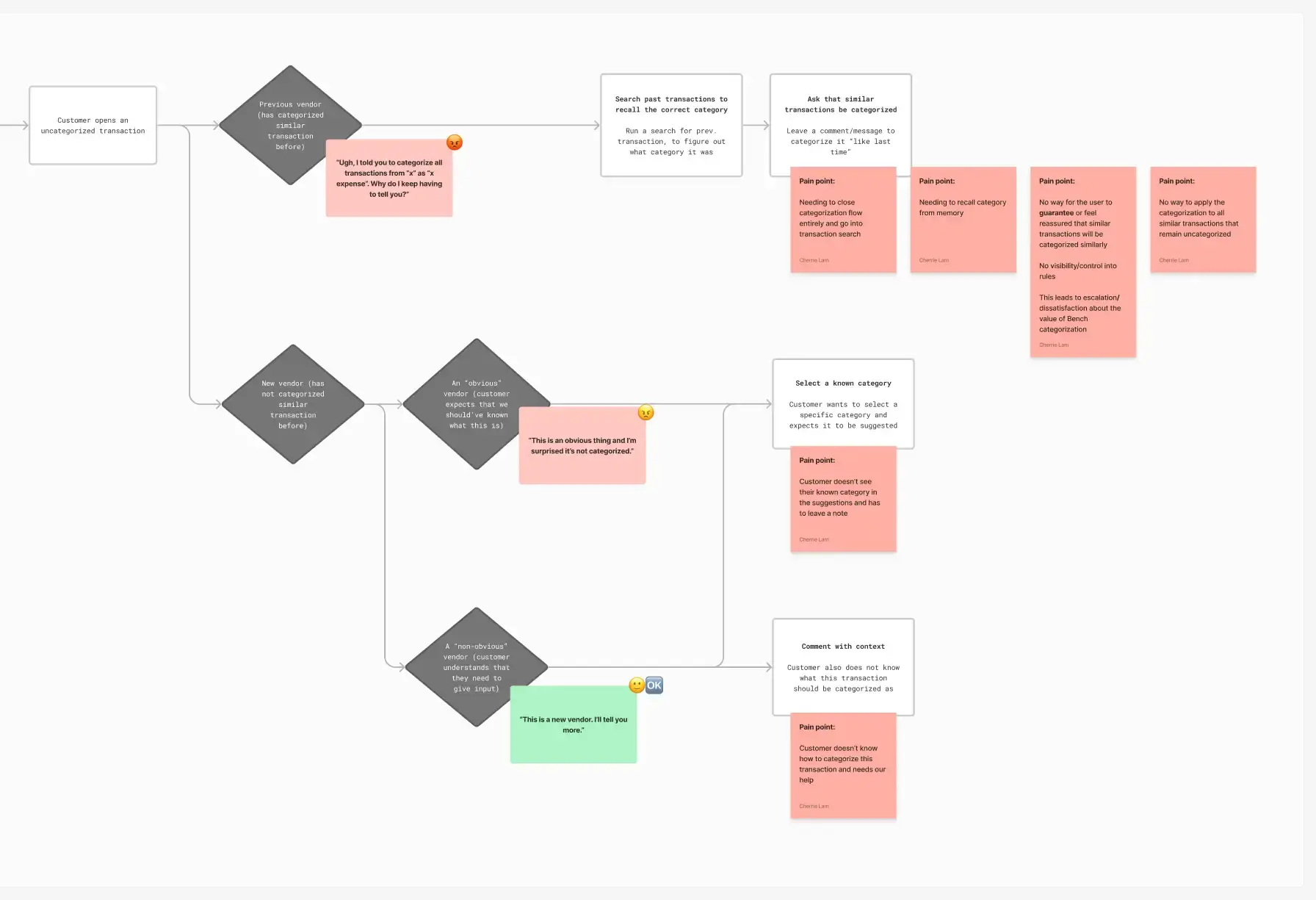

Discovery

Conducted customer and bookkeeper interviews to uncover pain points

Analyzed uncategorized transaction data to identify automation opportunities

Reviewed customer chat transcripts to surface trust gaps

Conversation design

Partnered with product, operations, and engineering to build an AI-powered agent using licensed GPT models within Bench's secure infrastructure:

- Ledger mapping: Worked with ops to map transaction types to ledgers (categories) with example transactions, exception cases, and follow-up questions

- Prompt design: Worked with AI/ML engineers to test and refine how the assistant would generate responses

- Escalation rules: Defined which ambiguous cases and errors escalated to human review

- Voice & tone: Worked with brand/marketing to give the assistant a clear, supportive tone and to design in-flow messaging that reassured customers their bookkeeper would still review categorizations.

Service blueprinting

Collaborated with operations teams to rethink how AI, specialists, and bookkeepers worked together behind the scenes. This resulted in redefined roles, where AI handled first-pass categorization; specialists reviewed edge cases/escalations; bookkeepers oversaw overall categorization consistency and quality reviews. I also mapped escalation paths, which ensured unclear or sensitive transactions were routed back to human specialists.

Design & prototyping

- Scoping MVP: Worked with Product & Eng to define the MVP vs. the future vision, prioritizing launch-critical features while mapping long-term improvements

- Prototyping flows: Tested variations to validate usability

- Technical collaboration: Worked with eng to scope what the models could support, simplifying designs to ship faster

Testing

- Internal testing: Ran early prototypes with bookkeepers, using real transactions to evaluate the AI’s suggestions. Confusing or inaccurate outputs were logged to identify gaps in training data and refine prompts

- Alpha testing: Conducted limited tests with a small group of customers to observe initial interactions and surface any usability or trust issues

- Beta testing & segmented rollout: Incrementally rolled out the feature to larger segments of customers in beta, allowing us to monitor behaviour, track adoption, and collect qualitative feedback. This helped us catch edge cases, tune responses and flows, and adjust before broader releases

Constraints

Tech: Accuracy of AI/ML models required iterative refinement

Organizational: Balancing executive enthusiasm for ChatGPT-style AI interactions with practical user needs

Resources: Company restructuring and financial pressures accelerated AI delivery timelines and required shipping MVPs before polish

Trust: System, design, and messaging needed to uphold accuracy and emphasize bookkeeper oversight

Categorization Assistant

Before

For unclear or complex categorizations, customers had to leave notes for bookkeepers, leading to slow turnaround and lost context.

After

Conversational AI guided customers in real time with vendor-based prompts, clarifying questions, and tax-related verifications. Bookkeepers still retained oversight.

Not every problem needs a chatbot

The initial redesign moved all categorization into a chat flow. Testing and user feedback quickly revealed issues:

- Chat can be cognitively heavy when customers want quick selections

- Because the AI agent had to load inside Bench’s microfrontend architecture, latency was noticeable

- AI’s responses were variable in length and clarity, creating uneven interactions

These insights reframed how we thought about the utility of chat. Instead of making chat the primary interface, I redesigned it as a supporting layer, best used when customers needed extra guidance or compliance checks. Everyday categorizations moved back into a selection-first flow, where speed and predictability mattered most.

With industry hype around conversational AI, it's easy to see chatbots as the answer. Here are some alternative explorations of how AI might support categorization beyond the chatbot interface:

Similar Transactions

Before

New and catch-up customers manually categorized repeat vendors until enough data was available to auto-categorize them.

After

AI surfaced contextual groups right after a single categorization, enabling bulk action with one click. Bookkeepers saw audit trails showing “source transaction” to ensure accuracy.

Progressive disclosure builds trust

One early idea for Similar Transactions grouped similar transactions by vendor directly on the transactions screen. While theoretically valuable for vendor-based analyses (e.g., calculating expenses by vendor), testing showed that when the AI grouping made mistakes, the errors were too visible and persistent, leaving customers feeling responsible for fixing them.

I shifted to a contextual design, where groups appeared only after a customer categorized one transaction. In this model, incorrect suggestions could be skipped or unchecked, then disappeared without consequence. By revealing automation only when relevant, the feature felt like a lightweight assist instead of extra work.

This progressive disclosure not only reduced cognitive load and hid AI mistakes gracefully, but also helped customers build trust in the system (and was faster to ship!).

Making automation audit-friendly

Designing internal workflows was just as important as the customer experience. For example, on the bookkeeper platform, transactions categorized through Similar Transactions were clearly flagged with their source. Bookkeepers could see which transaction provided the reasoning, ensuring tax justification and compliance.

Results

Customers noticed the difference. Here's how one customer, Patrick McKenzie, described the value Bench delivered:

This is so unbelievably more time efficient than requiring either a synchronous back and forth with my bookkeeper or, more likely, requiring me to bat emails around with a latency of several days because my job is not actually responding to questions about a $5 invoice quickly.

— Patrick McKenzie (@patio11) October 6, 2024

By the numbers

Customer impact

- 22% increase in monthly book completions, reducing delays/waiting for bookkeeper action

- 0.1% dropoff rate in Categorization flow indicating customer trust and adoption

- 67% less time spent categorizing (~20 minutes saved per session), based on customer-reported time spent categorizing

- 95%+ accuracy maintained, preserving trust in a compliance-critical process. Accuracy is measured by whether bookkeepers changed the AI's categorization during review

Business impact

- 230,000 transactions auto-categorized in 3 months with Categorization Assistant, equivalent to ~30,000 hours of human bookkeeping or 37% of the work done by specialist teams in the same period

- 55% increase in the rate of customers' self-serve categorizations after Similar Transactions launched, saving ~110 workdays per month

- At full adoption, customers categorized ~130,000 transactions in a month using both features with 75% requiring no internal action

Future opportunities

Although Bench's closure paused further development, I'd been keen on some next steps for improving the experience based on research of chat logs, in-app surveys, and customer interviews:

Smarter AI with industry context

Adding business context would make categorizations feel smarter and more intuitive: e.g., for a coffee shop, a "coffee" purchase would be Cost of Goods Sold rather than Office Kitchen Expense or Business Meals Expense

Training on past conversations

Using past customer comments and bookkeeper decisions to inform a customer-specific model. If a customer had commented “Sarah” and the bookkeeper categorized the transaction as “Professional Services Expense”, the AI could learn this pattern and auto-categorize whenever “Sarah” is entered

One place for every transaction

Unifying all categorization-related actions (notes, document uploads, bookkeeper follow-ups, and tax verifications) into a single thread for clarity, easier resolution, and context tracking for auditability